Top 10 Most Promising AI Projects to Watch in 2021

What are the groundbreaking Machine Learning and AI projects that will likely change the existing AI/ML landscape and bring their own rules of the game?

Leading corporations in China, the US and Europe spend billions on the creation of so-called Strong AI, capable of fully replacing human beings. In 2020, however, this controversial technology still did not emerge, as described in our previous blog post, which also covered the most important AI developments of the past year.

Disruptive ML/AI projects and technologies, however, enable smart companies around the globe to be more agile, competitive and cost-efficient. ImmuniWeb keeps track of recent advances in the Machine Learning and AI industry, while continuously improving its own award-winning AI/ML technology for application security testing, attack surface management and Dark Web monitoring.

Below are the most interesting, promising or otherwise important ML/AI projects, research efforts and trends we suggest watching in 2021 to stay up to date with the industry:

1. Transformers

Transformers, a type of deep learning model, have been enabling many research efforts in AI recently. They revolutionized text processing by both improving its quality and simplifying it. They take a text sequence as input and produce another text sequence as output, for example, to translate an English sentence into German.

They are based on attention, a mechanism that weighs how relevant each word of the input is to the word currently being processed, so that the model focuses on the most important words while creating the output text. Another main component of transformers is a stack of encoder layers and decoder layers. As their names suggest, the encoder layers build a representation of the input text, and the decoder layers turn that representation into the desired output.
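The attention step can be sketched in a few lines of plain Python. This is a minimal sketch of scaled dot-product attention, softmax(Q·Kᵀ / √d_k)·V, as defined in the original transformer paper ("Attention Is All You Need"), assuming small lists instead of tensors; real models learn the query, key and value projections and run many attention heads in parallel.

```python
import math
from typing import List

Matrix = List[List[float]]

def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over one row of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries: Matrix, keys: Matrix, values: Matrix) -> Matrix:
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V."""
    d_k = len(keys[0])
    output = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)  # how much each input position matters for this query
        # Output row = weighted sum of the value vectors.
        output.append([sum(w * v[j] for w, v in zip(weights, values))
                       for j in range(len(values[0]))])
    return output

# Toy self-attention over three 2-dimensional "word vectors" (Q = K = V).
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out = attention(tokens, tokens, tokens)
print(out)
```

Each output row is a blend of the value vectors, weighted toward the inputs whose keys best match the query.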

2. Large language models

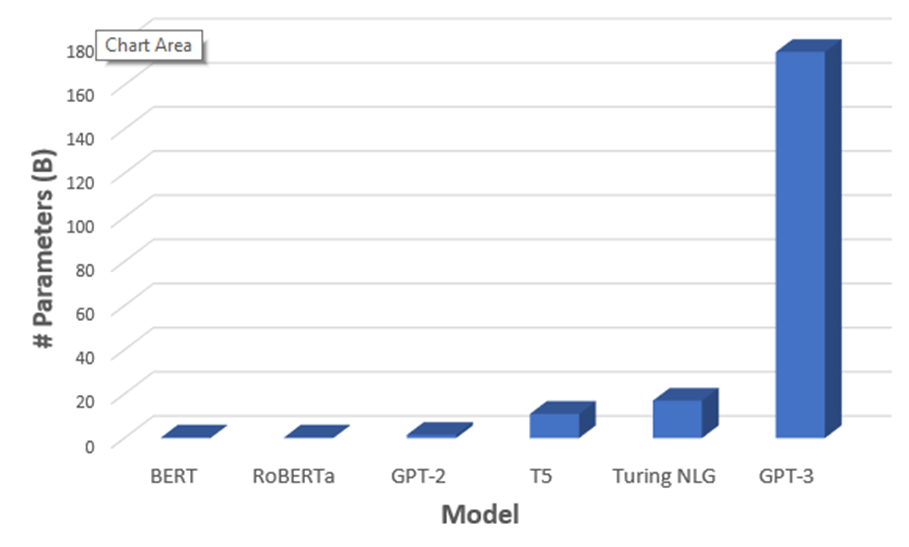

One of the biggest pieces of AI news in 2020 was GPT-3 (Generative Pre-trained Transformer 3) by OpenAI. It is a gigantic pre-trained language model with 175 billion parameters, 10x more than Microsoft’s Turing NLG, the previous leader.

It was trained on a huge amount of web text, books, and Wikipedia. As a result, the model is task-agnostic: given only a short task description and, optionally, a few examples in its input, it can do a lot of things without any re-training or fine-tuning:

- Language Translation

- Text Classification

- Sentiment Extraction

- Reading Comprehension

- Named Entity Recognition

- Question Answer Systems

- News Article Generation, etc.
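To make "without re-training" concrete: GPT-3 is steered through its input text alone. The sketch below only assembles a few-shot prompt in the format illustrated in the GPT-3 paper (a task description, a few solved examples, then an unfinished one). The function name is hypothetical, and no model is called here; sending the prompt to GPT-3 would require OpenAI’s API, which is not shown.

```python
from typing import List, Tuple

def build_few_shot_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Hypothetical helper: task description, solved examples, then the open query."""
    lines = [task]
    for source, target in examples:
        lines.append(f"{source} => {target}")
    # The language model is expected to continue the text after the final "=>".
    lines.append(f"{query} =>")
    return "\n".join(lines)

prompt = build_few_shot_prompt(
    "Translate English to French:",
    [("sea otter", "loutre de mer"), ("cheese", "fromage")],
    "peppermint",
)
print(prompt)
```

Switching the model to a different task means changing the description and examples, not the model’s weights.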

However, it still has a number of problems:

- Repetitions

- Coherence loss

- Contradictions

- Drawing real conclusions

- Multi-digit addition and subtraction

Although the model shows stunning results, not everyone is so excited about it. Some researchers note that the generator-based approach is a bit too simplistic compared to more advanced architectures, and that all the amazing results were achieved by leveraging the “brute force” of huge amounts of data and the number of parameters used in training.

But one thing keeps many people interested in this project. This is the third generation of GPT models, and each generation has shown steady performance gains on different tasks as it grew. So the question is: if the next generation of GPT (GPT-4?) uses even more data and more parameters, will it be able to amaze us even more?

3. BERT and friends

Bidirectional Encoder Representations from Transformers (BERT) is an encoder based on the transformer architecture. Most of BERT’s predecessors could capture context in only one direction, either leftward or rightward. For example, GPT-family models predict the next word in a sequence based on the preceding context only.

The authors of BERT introduced random masking of words, which enabled bidirectional models: models that use not only the preceding context but also the words that follow the masked one.
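The masking step can be sketched as follows. This is a simplified illustration of the procedure described in the BERT paper, assuming whitespace-separated words instead of BERT’s WordPiece subwords and a per-position coin flip instead of selecting exactly 15% of positions: a selected word becomes a prediction target and is replaced with [MASK] 80% of the time, with a random vocabulary word 10% of the time, and left unchanged otherwise. The function name mask_tokens is hypothetical.

```python
import random
from typing import List, Optional, Tuple

MASK = "[MASK]"

def mask_tokens(tokens: List[str], vocab: List[str], mask_prob: float = 0.15,
                rng: Optional[random.Random] = None) -> Tuple[List[str], List[Optional[str]]]:
    """Hypothetical BERT-style masking: returns (corrupted tokens, per-position targets)."""
    rng = rng or random.Random()
    corrupted = list(tokens)
    targets: List[Optional[str]] = [None] * len(tokens)  # None = not predicted here
    for i, tok in enumerate(tokens):
        if rng.random() >= mask_prob:
            continue  # position not selected
        targets[i] = tok  # the model must recover the original word at this position
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = MASK               # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random word
        # remaining 10%: keep the original word unchanged
    return corrupted, targets

words = "the cat sat on the mat".split()
print(mask_tokens(words, vocab=words, rng=random.Random(1)))
```

Because the model sees words on both sides of each target, it learns to use left and right context at once.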

This approach achieved state-of-the-art results on many tasks and gave birth to a whole family of BERT-ish models:

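- RoBERTa (Robustly Optimized BERT Pretraining Approach): tweaks to the pretraining process, such as training longer on more data with larger batches, dynamic masking, and dropping the next-sentence-prediction objective, improved performance on some tasks.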

- BART (Bidirectional and Auto-Regressive Transformers) is a denoising (correction) model: it pairs a BERT-like bidirectional encoder with a GPT-like autoregressive decoder and is trained to reconstruct original texts from corrupted versions of them. The authors found BART performing extremely well on most tasks.

- DistilBERT is a compressed version of BERT, trained with knowledge distillation; its authors claim it is 40% smaller and 60% faster while retaining 97% of BERT’s language understanding capabilities.
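BART’s corrupted training texts come from noising transforms; the BART paper describes token masking, token deletion, text infilling, sentence permutation and document rotation. The sketch shows only two of them, token deletion and sentence permutation, applied to whitespace tokens. The helper names are hypothetical, and the 15% deletion rate is an arbitrary choice for illustration.

```python
import random
from typing import List, Tuple

def delete_tokens(tokens: List[str], p: float, rng: random.Random) -> List[str]:
    """Token deletion: drop each token with probability p; the model must infer what is missing."""
    return [t for t in tokens if rng.random() >= p]

def permute_sentences(sentences: List[str], rng: random.Random) -> List[str]:
    """Sentence permutation: shuffle sentence order; the model must restore it."""
    shuffled = list(sentences)
    rng.shuffle(shuffled)
    return shuffled

def make_denoising_pair(sentences: List[str], p: float = 0.15, seed: int = 0) -> Tuple[str, str]:
    """Hypothetical helper: (corrupted input, original target) for seq2seq denoising training."""
    rng = random.Random(seed)
    corrupted_sents = permute_sentences(sentences, rng)
    corrupted = " ".join(" ".join(delete_tokens(s.split(), p, rng)) for s in corrupted_sents)
    return corrupted, " ".join(sentences)

print(make_denoising_pair(["The cat sat on the mat.", "It was happy there."], p=0.2, seed=3))
```

The encoder reads the corrupted input, and the decoder is trained to generate the original text word by word.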

4. T5

In 2019, a Google research team published the paper Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer, introducing a novel “Text-to-Text Transfer Transformer” (T5) neural network model.

T5 is an extremely large neural network model that treats every task as text-to-text: the input is text, prefixed with a short task description, and the output is text, even for classification. It is trained on a mixture of unlabeled text and labeled data from popular natural language processing tasks, then fine-tuned individually for each of the tasks that the authors aim to solve. It works quite well, setting the state of the art on many of the most prominent text classification tasks for English, as well as on several additional question-answering and summarization tasks.
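The text-to-text idea is largely a data-formatting convention. In this minimal sketch, the two examples reuse task prefixes shown in the T5 paper ("translate English to German:" and "cola sentence:"), while the PREFIXES keys and the to_text_to_text helper are our own hypothetical names.

```python
from typing import Dict, Tuple

# Hypothetical task table; the prefix strings follow examples from the T5 paper.
PREFIXES: Dict[str, str] = {
    "translate_en_de": "translate English to German: ",
    "summarize": "summarize: ",
    "acceptability": "cola sentence: ",
}

def to_text_to_text(task: str, source: str, target: str) -> Tuple[str, str]:
    """Cast a supervised example as an (input text, output text) pair."""
    return PREFIXES[task] + source, target

examples = [
    to_text_to_text("translate_en_de", "That is good.", "Das ist gut."),
    # Even a classification label is emitted as plain text.
    to_text_to_text("acceptability", "The course is jumping well.", "not acceptable"),
]
for inp, out in examples:
    print(f"{inp!r} -> {out!r}")
```

Because every task shares this format, one model, one loss and one decoding procedure can serve all of them.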

5. Embeddings

Embeddings are mappings of words (or other sequences) to vectors. Recently, vector representations of word semantics have become popular among researchers because embeddings carry much more information than traditional ways of representing linguistic data. In their most basic form, embeddings encapsulate information about the most frequent contexts in which a word occurs.

Embeddings are not a new project or technology, but with transformers they have become able to capture even more information. Initially, each word had only one embedding that blended all of its possible contexts, so the vector for the word table would reflect both table as a piece of furniture and table as a data structure.
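A count-based sketch shows why one vector per word mixes senses. Real static embeddings such as word2vec or GloVe are dense, learned vectors; this toy version simply counts neighboring words within a window over a made-up two-sentence corpus, to illustrate that an embedding summarizes the contexts a word occurs in.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List

def cooccurrence_vectors(sentences: List[List[str]], window: int = 2) -> Dict[str, Counter]:
    """Count-based static embeddings: word -> counts of words seen within `window` positions."""
    vectors: Dict[str, Counter] = defaultdict(Counter)
    for sent in sentences:
        for i, word in enumerate(sent):
            lo, hi = max(0, i - window), min(len(sent), i + window + 1)
            for j in range(lo, hi):
                if j != i:
                    vectors[word][sent[j]] += 1
    return vectors

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

corpus = [
    "the table and chair are wooden".split(),   # furniture sense
    "the table has rows and columns".split(),   # data-structure sense
]
vecs = cooccurrence_vectors(corpus)
# The single vector for "table" mixes "chair" (furniture) with "rows" (data).
print(sorted(vecs["table"]))  # → ['and', 'chair', 'has', 'rows', 'the']
```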

With big models like BERT, it is now possible to have contextualized word embeddings, so that different meanings of table get different vectors.

6. Hugging Face

NLP-focused startup Hugging Face released a freely available library called Transformers, which enables programmers and researchers to easily use and fine-tune many of the currently available transformer models.

The Transformers package contains over 30 pretrained models in more than 100 languages, spanning eight major architectures for natural language understanding (NLU) and natural language generation (NLG):

- BERT (from Google);

- GPT (from OpenAI);

- GPT-2 (from OpenAI);

- Transformer-XL (from Google/CMU);

- XLNet (from Google/CMU);

- XLM (from Facebook);

- RoBERTa (from Facebook);

- DistilBERT (from Hugging Face).

The unified way of using different transformer models greatly facilitates research and AI application development.

7. Facebook’s PyTorch-BigGraph

Transformers are used mostly for text (or any other sequential data) processing. But what about other types of data structures?

Recently, Facebook open-sourced PyTorch-BigGraph (PBG), a new framework that produces embeddings for extremely large graphs using PyTorch.

Graphs are one of the main data structures in machine learning applications. “Specifically, graph-embedding methods are a form of unsupervised learning, in that they learn representations of nodes using the native graph structure. Training data in mainstream scenarios such as social media predictions, internet of things (IOT) pattern detection or drug-sequence modeling is naturally represented using graph structures. Any one of those scenarios can easily produce graphs with billions of interconnected nodes.”
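Graph embeddings are typically trained so that connected nodes score higher than unconnected ones. The sketch shows that objective in plain Python, assuming a dot-product score, logistic loss and SGD with sampled negative pairs. It is not PyTorch-BigGraph’s own API, and it omits what makes PBG notable: partitioning huge graphs so that their embeddings do not have to fit in memory at once. To keep the toy example stable, negatives are drawn only from nodes not adjacent to the source node; all names are hypothetical.

```python
import math
import random
from typing import Dict, List, Set, Tuple

def sigmoid(x: float) -> float:
    # Numerically stable logistic function.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)

def score(emb: List[List[float]], u: int, v: int) -> float:
    return sum(a * b for a, b in zip(emb[u], emb[v]))

def train_graph_embeddings(edges: List[Tuple[int, int]], num_nodes: int, dim: int = 8,
                           epochs: int = 200, lr: float = 0.1, negatives: int = 2,
                           seed: int = 0) -> List[List[float]]:
    """Hypothetical trainer: connected pairs should outscore sampled unconnected pairs."""
    rng = random.Random(seed)
    emb = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(num_nodes)]
    neighbors: Dict[int, Set[int]] = {n: set() for n in range(num_nodes)}
    for u, v in edges:
        neighbors[u].add(v)
        neighbors[v].add(u)

    def step(u: int, v: int, label: float) -> None:
        grad = sigmoid(score(emb, u, v)) - label  # d(logistic loss)/d(score)
        for k in range(dim):
            gu, gv = grad * emb[v][k], grad * emb[u][k]
            emb[u][k] -= lr * gu
            emb[v][k] -= lr * gv

    for _ in range(epochs):
        for u, v in edges:
            step(u, v, 1.0)  # observed edge: push its score up
            candidates = [w for w in range(num_nodes) if w != u and w not in neighbors[u]]
            for _ in range(negatives):
                if candidates:
                    step(u, rng.choice(candidates), 0.0)  # unconnected pair: push score down
    return emb

# Two disconnected triangles: {0, 1, 2} and {3, 4, 5}.
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5)]
emb = train_graph_embeddings(edges, num_nodes=6)
print(round(score(emb, 0, 1), 2), round(score(emb, 0, 4), 2))
```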

8. Neural Architecture Search

Is it possible to create an AI that creates AI? It may sound unrealistic (or too threatening, depending on your stance towards the idea of the AI conquering the world), but it is something that researchers are working on.

For example, GPT-3 can generate some HTML/CSS code or even build a simple neural network. However, its output is rather simplistic: it can help coders automate some routine operations, but it is not a production-ready solution.

Neural Architecture Search (NAS), by contrast, is an approach that searches for an optimal neural network architecture for a given dataset. “Much of a machine learning engineer’s work relies on testing some of the trillions of potential neural network structures based on intuition and experience, and NAS can considerably reduce that cost. NAS uses AI to create better and new AI through intelligent searching of potential structures that humans have never thought of. It’s not generalization, it’s discovery.”
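Random search is one of the simplest NAS strategies and a common baseline; more advanced systems use reinforcement learning, evolutionary algorithms or gradient-based relaxations of the search space. In this sketch, the search space is hypothetical, and the expensive "train the candidate and measure validation accuracy" step is replaced by a made-up proxy_score function so that the example runs instantly.

```python
import random
from typing import Callable, Dict, List, Tuple

SearchSpace = Dict[str, List[int]]
Architecture = Dict[str, int]

def random_search(space: SearchSpace, evaluate: Callable[[Architecture], float],
                  trials: int = 20, seed: int = 0) -> Tuple[Architecture, float]:
    """Sample architectures at random from the search space and keep the best-scoring one."""
    rng = random.Random(seed)
    best_arch: Architecture = {}
    best_score = float("-inf")
    for _ in range(trials):
        arch = {name: rng.choice(options) for name, options in space.items()}
        s = evaluate(arch)  # real NAS: train this candidate and measure validation accuracy
        if s > best_score:
            best_arch, best_score = arch, s
    return best_arch, best_score

# Hypothetical search space.
space: SearchSpace = {"num_layers": [1, 2, 3, 4], "hidden_units": [16, 32, 64, 128]}

def proxy_score(arch: Architecture) -> float:
    # Made-up objective standing in for accuracy: prefers about 128 "units" in total.
    params = arch["num_layers"] * arch["hidden_units"]
    return -abs(params - 128) / 128

best, s = random_search(space, proxy_score, trials=50)
print(best, s)
```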

9. AI for Cybersecurity Applications

There is no single AI technology aimed specifically at cybersecurity, but the solutions described below can help security experts in many ways:

- Anomaly detection — with AI it is possible to detect and track unusual activities that may be a sign of malicious activity;

- Misuse detection — AI may be trained to detect not just any anomaly, but rather specific types of activity that may be attributed to security breaches;

- Data exploration helps keep track of complex infrastructure that many companies have. Tracking numerous data assets and revealing their connections may help visualize the infrastructure and help security analysts by increasing the ‘readability’ of incoming requests.

- Risk assessment helps to spot the “weakest” points of a company’s infrastructure as well as to estimate its overall “hackability”.
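Anomaly detection, in its simplest form, compares new activity against a baseline of normal behavior. The sketch is a deliberately simple statistical stand-in for the ML models used in practice (such as isolation forests or autoencoders): it learns the mean and standard deviation of historical counts and flags observations more than three standard deviations away. The failed-login numbers and the 3.0 threshold are hypothetical.

```python
import statistics
from typing import List, Tuple

def fit_baseline(normal_counts: List[float]) -> Tuple[float, float]:
    """Learn what 'normal' looks like: mean and standard deviation of historical counts."""
    return statistics.mean(normal_counts), statistics.pstdev(normal_counts)

def flag_anomalies(new_counts: List[float], baseline: Tuple[float, float],
                   threshold: float = 3.0) -> List[int]:
    """Indices of observations more than `threshold` standard deviations from the baseline mean."""
    mean, stdev = baseline
    if stdev == 0:
        return [i for i, c in enumerate(new_counts) if c != mean]
    return [i for i, c in enumerate(new_counts) if abs(c - mean) / stdev > threshold]

# Hypothetical failed-login counts per hour for one account.
history = [3, 5, 4, 6, 5, 4, 3, 5, 4, 5]
today = [4, 5, 48, 3]
print(flag_anomalies(today, fit_baseline(history)))  # → [2]
```

Misuse detection differs mainly in the training data: instead of modeling "normal", a classifier is trained on labeled examples of known malicious activity.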

10. Augmented Intelligence

Still, AI has its limits. In many respects, artificial intelligence lacks the flexibility and insight of human intelligence. The recent rise of AI is mostly based on its ability to perform some intelligent operations much faster than a human can, not better.

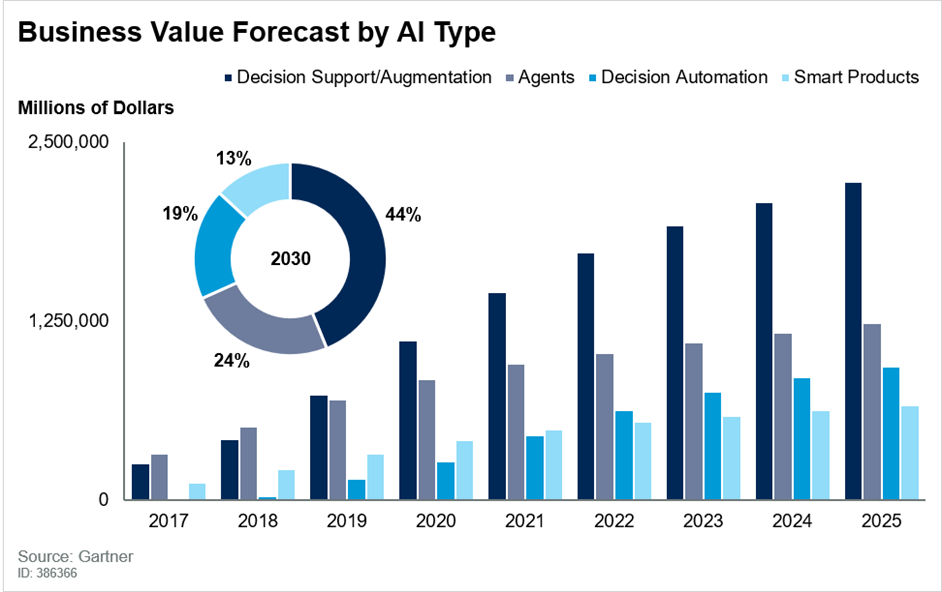

The idea to make the two types of intelligence work together gave birth to Augmented Intelligence. “Augmented intelligence is all about people taking advantage of AI. As AI technology evolves, the combined human and AI capabilities that augmented intelligence allows will deliver the greatest benefits to enterprises,” said Gartner Research VP Svetlana Sicular.

Looking at the chart that Gartner has created, two things are quite clear:

- Augmented intelligence is growing at a rapid rate, as indicated by the slope of the curve.

- Although AI-powered decision automation is in its infancy right now, it is set to rise quickly, dwarfing the growth of smart products within a few short years.

By 2023, Gartner predicts that around 40% of infrastructure and operations teams in large enterprises will adopt AI-augmented automation for increased productivity.

ImmuniWeb uses this paradigm in AI solution development: we augment the expertise of our auditors with the power of the most recent AI technology.
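One common way to put augmented intelligence into practice is confidence-based routing: the model handles the cases it is very sure about, and everything else goes to a human expert. This is a hypothetical sketch, not ImmuniWeb’s implementation; the Finding class, the confidence scores and the 0.95 threshold are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import List, Tuple

@dataclass
class Finding:
    name: str
    model_confidence: float  # hypothetical score in [0, 1] from an ML classifier

def triage(findings: List[Finding],
           auto_threshold: float = 0.95) -> Tuple[List[Finding], List[Finding]]:
    """Split findings: high-confidence ones are handled automatically, the rest go to a human."""
    automated = [f for f in findings if f.model_confidence >= auto_threshold]
    for_review = [f for f in findings if f.model_confidence < auto_threshold]
    return automated, for_review

auto, review = triage([Finding("SQL injection", 0.99), Finding("possible XSS", 0.6)])
print([f.name for f in auto], [f.name for f in review])
```

Lowering the threshold shifts work from humans to the model, at the cost of more unreviewed mistakes.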

Conclusion

2021 is poised to be the most vibrant year yet for the practical advancement of AI/ML technologies capable of supporting the sustainable development of humanity.

The innovation, however, comes at a price: new possibilities bring new risks, and companies embracing the cutting edge of emerging technologies should be prepared to deal with them. One way to approach these novel risks is proposed by Gartner in its MOST Framework. The framework offers a systematic approach to AI-related risk management by defining threat vectors and types of damage, and by proposing risk management measures.

At ImmuniWeb, we also expect cybercriminals to make more malicious use of Machine Learning in 2021; this will not, however, create substantially new hacking techniques but merely accelerate and fine-tune existing attack vectors.

We also believe that the cybersecurity industry will likewise gain a solid advantage over the bad guys by wisely implementing AI/ML in its products, while remaining cautious about economic practicality and the above-mentioned risks of AI.